How do I create an analysis using python (crandas)?

Once other users participating in the collaboration have imported datasets into the engine, you can get started to analyze this data! Once you are familiar with the approval flow, this article will help you to actually record and run your analysis.

1. Validate that you are assigned the role of analyst in the design and authorized environment

2. Complete the steps to download your keypair and the connection file, in both the design and the authorized environment.

3. Initialize your local Python installation and install crandas

4. In Python, you can now design a script on dummy data, using either a dummy data csv, a dummy DataFrame or the handle of a table that was imported to the design platform. All three options are shown in the code snippet below.

You only need to do one of these options

import crandas as cd

# Option 1 - Create a Dummy Dataframe with the same columns as the original data

dummy_data1 = cd.DataFrame({'Fruit':['apple','pear','banana','apple'],

'Prijs':[2,3,4,2]})

# Option 2 - upload a csv of dummy data to use

dummy_data2 = cd.read_csv('PATH/TO/dummy_data.csv')

# Option 3 - refer to a table handle from the design platform

dummy_data3 = cd.get_table('TABLE_HANDLE')

Once you are done designing your analysis on dummy data, you can run it on production data using the authorized environment. There are three types of data sources that can be analyzed. For each of these data sources, the explanation is shown here. An elaborate tutorial with some examples on how to analyze imported datasets or surveys can be found in the crandas documentation.

Recording a script

5. A script can be recorded by running either of the following code snippets, depending on whether you are processing data from a data import, a survey or a data request. These code snippets are also shown on the design platform for each of those data sources, where the values for the design variables are automatically filled in. For data requests, the code snippet is slightly different since the number of input datasets can be more than one.

Data import/survey:import crandas as cd

# copy from design platform:

DESIGN_ENV = ""

DUMMY_HANDLE = ""

# copy from authorized platform:

AUTH_ENV = ""

ANALYST_KEY = ""

PROD_HANDLE = ""

SCHEMA = {''}

# fill this in yourself:

ANALYSIS_NAME = ""

cd.script.reset()

cd.connect(DESIGN_ENV, analyst_key=ANALYST_KEY)

cd.get_table(DUMMY_HANDLE).save(dummy_for=PROD_HANDLE)

script = cd.script.record(name=ANALYSIS_NAME, path=ANALYSIS_NAME + '.recording')

table = cd.get_table(PROD_HANDLE, schema=SCHEMA)

## add any analysis on `table`

script.end()

Data request:

import crandas as cd

import pandas as pd

# copy from design platform:

DESIGN_ENV = ""

DESIGN_HANDLES = []

# copy from authorized platform:

AUTH_ENV = ""

ANALYST_KEY = ""

SCHEMA = {}

production_metadata = pd.DataFrame({

"handle": [],

"date_uploaded": [],

"owner": []})

# fill this in yourself:

ANALYSIS_NAME = ""

cd.script.reset()

cd.connect(DESIGN_ENV, analyst_key=ANALYST_KEY)

# Map production table handles to design table handles

table_dict = dict(zip(production_metadata["handle"].tolist(), DESIGN_HANDLES))

# Link each dummy table to its production handle

for handle in production_metadata["handle"].tolist():

cd.get_table(table_dict.get(handle)).save(dummy_for=handle)

script = cd.script.record(name=ANALYSIS_NAME, path=ANALYSIS_NAME + '.recording')

# Concatenate the uploaded tables into a single table

tables = [cd.get_table(handle, schema=SCHEMA) for handle in production_metadata["handle"].tolist()]

table = cd.concat(tables)

## add any analysis on `table`

script.end()

Variables

The values for the capitalized variables on top can be found as follows:

DESIGN_ENV:

The name of the design environment. Is the same as the name of the connection file, downloaded from the design platform, without the '.vdlconn' at the end.

AUTH_ENV:

Similar to DESIGN_ENV. The name of the authorized environment.

DUMMY_HANDLE:

Handle of the dummy table, corresponding to the production table to be analyzed. The easiest way to access this handle, is to import the dummy dataset as a table to the design platform and copy the handle of the imported table.

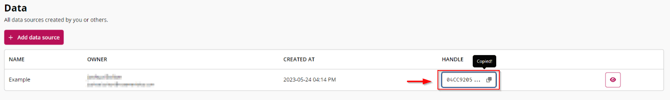

Tip: You can copy the handle easily by clicking the handle associated with the dataset you want to access through crandas.

Note that, when the dataset you want to analyze is a survey, there are two ways to import dummy data and access the dummy handle:

- Generate a dummy dataset in the same structure as the survey, and import it either via the design platform or directly in the script.

- Generate an identical survey in the design platform, and respond to it at least once. Then copy the handle of the generated response table.

PRODUCTION_METADATA:

Handles and metadata of the production tables, when these are imported via a data request. These can be copied from the authorized platform when the data request has been answered.

DESIGN_HANDLES:

To record a script that processes data that was imported via a data request, each of the handles in PRODUCTION_METADATA should be linked to an individual dummy table that is imported to the design environment. Therefore, the number of table handles in DESIGN_HANDLES should be the same as the number of handles in PRODUCTION_METADATA. If the data request was answered multiple times in the authorized environment, there are two options:

- Let the data request in the design environment be answered multiple times as well

- Import multiple dummy tables into Roseman Labs, either by means importing them directly via the design script or by importing them via the design platform.

In either case, the dummy table handles need to be copied manually into DESIGN_HANDLES.

ANALYST_KEY:

The name of the private key that you downloaded at account initialization. If the key was saved at the default location, it can be accessed from here by just copying the complete filename.

ANALYSIS_NAME:

The name of the analysis, and the filename of the recording. To be chosen by the analyst (preferably without spaces).

This name should also be entered when requesting approval via the platform:

PROD_HANDLE/SCHEMA:

Handle and schema of the (sensitive) production dataset to be analyzed. Depending on the type of data source, this can either be a data request, a survey or an imported dataset. For each option, the steps are show below:

5.1 Data request

In case the production dataset is a data request, the schema of the dataset can be accessed as follows:

5.1.1. Send out a data request, and wait for its response.

5.1.2. Select the eye icon on the sent data request.

5.1.3. The schema can be found in the code snippet.

For information about data types, please check out this page. In case missing values are allowed in a column, add a ? behind the data type (e.g. 'int?')

5.2. Survey

In case the production dataset is a survey, the handle and schema of the dataset can be accessed as follows:

5.2.1. Navigate to the surveys tab and send out a survey, wait for the survey to be filled in by participants.

5.2.2. Hit the eye-icon to navigate to the survey view

5.2.3. The schema can be found in the code snippet.

In surveys, the column names are automatically formatted as 'questionX'

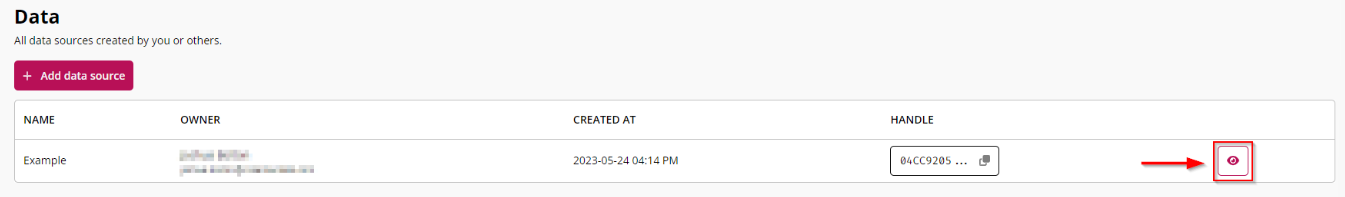

5.3. Imported dataset

5.3.1. Navigate to the "Data" tab on the left sidebar. Identify which dataset you would like to access and click the "Eye" button on the right of the row.

5.3.2. Once you have identified the dataset that you wish to use, you copy the handle for the dataset.

5.3.3. The schema can be found in the code snippet at the bottom of the page.

Recording the script

6. When all of the capitalized variables have been filled in, the analysis can be recorded by running the Python script.

7. This will produce the resulting RECORDING-file in your local filesystem.

8. We can then go back to the authorized platform and create a new analysis that needs to be approved. We then import the RECORDING-file that we have just generated. This recording file will include your Python script.

The recording will include only the Python code between the .record() and .save() commands. It will also include the output that was produced by the dummy data during recording to assist the approvers.

9. Once the approver has approved your script, you can download the resulting APPROVED- file and execute on the real data.

Running the approved script

10. Now go back to the Python environment and move the APPROVED-file to the same directory. If you run Python in the cloud, for example in a segregated jupyter-notebook, you can upload the APPROVED-file by clicking the indicated icon.

11. Then running the following code will run the approved script, and only that particular script, in authorized mode on the sensitive data. The capitalized variables remain the same.

Don't forget to restart the Python kernel before (re)running the approved script

Data import/survey:

# copy from design platform:

DESIGN_ENV = ""

DUMMY_HANDLE = ""

# copy from authorized platform:

AUTH_ENV = ""

ANALYST_KEY = ""

PROD_HANDLE = ""

SCHEMA = {''}

# fill this in yourself:

ANALYSIS_NAME = ""

import crandas as cd

cd.script.reset()

cd.connect(AUTH_ENV, analyst_key=ANALYST_KEY)

script = cd.script.load(ANALYSIS_NAME + '.approved')

table = cd.get_table(PROD_HANDLE, schema=SCHEMA)

## add the same analysis on `table` as was recorded

script.end()

Data request:

import crandas as cd

import pandas as pd

# copy from design platform:

DESIGN_ENV = ""

DESIGN_HANDLES = []

# copy from authorized platform:

AUTH_ENV = ""

ANALYST_KEY = ""

SCHEMA = {}

production_metadata = pd.DataFrame({

"handle": [],

"date_uploaded": [],

"owner": []})

# fill this in yourself:

ANALYSIS_NAME = ""

cd.script.reset()

cd.connect(AUTH_ENV, analyst_key=ANALYST_KEY)

script = cd.script.load(ANALYSIS_NAME + '.approved')

# Concatenate the uploaded tables into a single table

tables = [cd.get_table(handle, schema=SCHEMA) for handle in production_metadata["handle"].tolist()]

table = cd.concat(tables)

## add any analysis on `table`

script.end()

Hopefully this has explained how you can access and analyze a dataset using crandas!

If you want to learn more about crandas - click here to visit our documentation.

Thank you for taking the time to read this article. If you have feedback or need more information on specific topics, feel free to leave your comments below or reach out to support@rosemanlabs.com.